Introduction

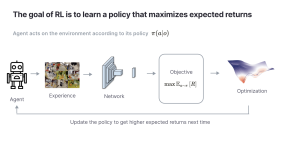

Reinforcement Learning (RL) is a branch of artificial intelligence in which agents learn from their own actions and the rewards they receive in complex, dynamic environments. Despite its promise, RL faces difficulties such as sparse rewards, the exploration-exploitation trade-off, and generalization to unfamiliar scenarios. To tackle these issues and make RL agents more explainable and interpretable, researchers are examining the potential of intrinsic motivation.

Intrinsic Motivation and Curiosity in RL

Intrinsic motivation is the drive to perform an activity for its own sake, without external rewards or punishments. Curiosity is a fundamental form of intrinsic motivation: it encourages RL agents to seek out new experiences and to reduce uncertainty and boredom. By leveraging intrinsic motivation and curiosity, RL agents can overcome the limitations of extrinsic rewards, such as sparsity, delay, and ambiguity. They can also improve their learning efficiency, adaptability, and robustness.
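
As a rough illustration of how curiosity can fill in for sparse extrinsic rewards, the sketch below uses a count-based novelty bonus, one common way to approximate curiosity. It is a minimal example rather than a reference implementation: the class `CountBonus`, the function `shaped_reward`, and the parameters `scale` and `beta` are hypothetical names chosen for this example, and states are assumed to be hashable.

```python
import math
from collections import defaultdict


class CountBonus:
    """Count-based curiosity (illustrative): rarely visited states earn a larger bonus."""

    def __init__(self, scale=1.0):
        self.scale = scale               # assumed tuning knob, not from the article
        self.counts = defaultdict(int)   # visit count per (hashable) state

    def bonus(self, state):
        # Record the visit, then return a bonus that shrinks as 1 / sqrt(visits).
        self.counts[state] += 1
        return self.scale / math.sqrt(self.counts[state])


def shaped_reward(extrinsic, state, curiosity, beta=0.1):
    """Add a weighted curiosity bonus to the (possibly zero) extrinsic reward."""
    return extrinsic + beta * curiosity.bonus(state)


if __name__ == "__main__":
    curiosity = CountBonus()
    # The environment pays nothing here, yet the agent still gets a learning signal.
    for state in ["room_a", "room_a", "room_b"]:
        print(state, round(shaped_reward(0.0, state, curiosity), 3))
```

Even when the extrinsic reward is zero on every step, the bonus stays positive for new states and decays for familiar ones, which nudges the agent toward unexplored parts of the environment.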

Image by: https://www.anyscale.com/blog/the-reinforcement-learning-framework

Why Explainability and Interpretability Matter in RL

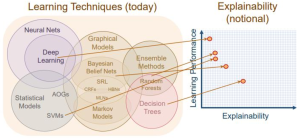

Explainability and interpretability are crucial in AI. Both concern how well an agent’s decision-making and behavior can be understood. Explainability is how well an agent can offer logical, comprehensible justifications for its actions and outcomes. Interpretability, by contrast, is how far humans or other agents can inspect and infer the agent’s internal state and reasoning. Both are needed to establish trust, transparency, and accountability in RL systems, especially when those systems interact with humans or operate in safety-critical domains.

Image by: https://www.semanticscholar.org/paper/Explainability-vs-.-Interpretability-and-methods-%E2%80%99-Tran-Draheim/db9f82b4647a85bb1b630dd313d729af3cec58db

Improving Explainability in RL through Intrinsic Motivation

Intrinsic motivation can significantly boost explainability in RL by providing supplementary feedback and guidance while the agent learns. For instance, intrinsic rewards can signal the agent’s progress, interest, and satisfaction, which offers insight into why it made specific decisions. Intrinsic motivation can also help the agent produce natural-language justifications for its behavior by connecting actions with internal states, beliefs, and goals. Finally, aligning the agent’s interests and preferences with those of its human partners promotes efficient communication and collaboration between humans and agents.
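
One simple way to picture this is to log the extrinsic and intrinsic parts of each step’s reward separately and turn the larger one into a short sentence. The sketch below does only that. `StepRecord`, `explain`, and the weight `beta` are hypothetical names for this example, and comparing the two reward components is a deliberately crude heuristic, not an established explanation method.

```python
from dataclasses import dataclass


@dataclass
class StepRecord:
    """One logged step: the action taken and both reward components (illustrative)."""
    action: str
    extrinsic: float
    intrinsic: float


def explain(record, beta=0.1):
    """Return a one-line, human-readable note on which reward component dominated."""
    weighted = beta * record.intrinsic
    if weighted > abs(record.extrinsic):
        driver = "curiosity (intrinsic bonus)"
    else:
        driver = "task reward (extrinsic)"
    return (
        f"Action '{record.action}' was rewarded mainly by {driver}: "
        f"extrinsic={record.extrinsic:.2f}, weighted intrinsic={weighted:.2f}"
    )


if __name__ == "__main__":
    print(explain(StepRecord("open_door", extrinsic=0.0, intrinsic=1.0)))
    print(explain(StepRecord("pick_up_key", extrinsic=1.0, intrinsic=0.5)))
```

Keeping the two components apart in the log is the design choice that matters here: once they are summed into a single scalar, the information about what the agent found interesting is lost.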

Image by: https://link.springer.com/chapter/10.1007/978-3-642-32375-1_2

Improving Interpretability in RL through Intrinsic Motivation

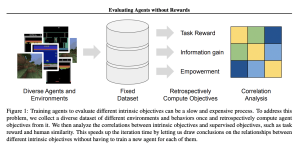

Intrinsic motivation also shapes how an agent represents its environment and generalizes across it, which in turn enhances interpretability in RL. By pushing the agent to learn diverse and useful features, intrinsic motivation lets it describe and predict its surroundings more accurately. It also helps the agent uncover hidden patterns and regularities in the environment, leading to simpler, more general policies. In addition, the ability to transfer and adapt knowledge and skills to new situations makes the agent’s performance easier to evaluate and compare.
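
The link between curiosity and prediction can be sketched with a tiny forward model: the agent counts which next state followed each state-action pair and is rewarded when a transition surprises it. This is a minimal tabular example under stated assumptions. `TabularForwardModel` and `surprise` are hypothetical names, states and actions are assumed to be hashable, and practical systems typically use learned neural models instead of counts.

```python
from collections import defaultdict


class TabularForwardModel:
    """Counts observed transitions; surprise is high when a transition was unexpected."""

    def __init__(self):
        # (state, action) -> {next_state: times observed}
        self.next_counts = defaultdict(lambda: defaultdict(int))

    def surprise(self, state, action, next_state):
        seen = self.next_counts[(state, action)]
        total = sum(seen.values())
        # Estimated probability of this outcome before observing it (0 if never tried).
        predicted_prob = seen[next_state] / total if total else 0.0
        seen[next_state] += 1  # update the model with the new observation
        return 1.0 - predicted_prob  # intrinsic reward in [0, 1]


if __name__ == "__main__":
    model = TabularForwardModel()
    for nxt in ["hall", "hall", "cellar", "hall"]:
        print(nxt, round(model.surprise("door", "open", nxt), 3))
    # The count table itself can be inspected directly.
    print(dict(model.next_counts[("door", "open")]))
```

Because the model is just a table of counts, a person can read off what the agent expects to happen after each action, which is the kind of inspectable internal state the paragraph above describes.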

Image by: https://www.marktechpost.com/2021/01/03/researchers-examine-three-intrinsic-motivation-types-to-stimulate-intrinsic-objectives-of-reinforcement-learning-rl-agents/

Challenges and Opportunities for Intrinsic Motivation in RL

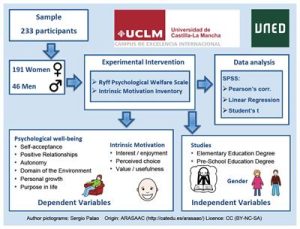

Despite its potential for enhancing explainability and interpretability in RL, intrinsic motivation comes with challenges and limitations that demand careful attention. Researchers must design intrinsic motivation mechanisms that are compatible with extrinsic rewards, balance intrinsic against extrinsic motivation, measure and evaluate how intrinsic rewards affect learning outcomes and behavior, and address the ethical implications. These challenges call for careful thought and deliberate strategies, and they also open opportunities for further research and innovation in RL.
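
To make the balancing problem concrete, one possible approach (among many) is to weight the intrinsic reward with a coefficient that shrinks over training, so that curiosity dominates early and the task reward dominates later. The sketch below assumes a linear schedule. `beta_schedule`, `combined_reward`, and all default values are hypothetical choices for illustration, not recommendations from the article.

```python
def beta_schedule(step, beta_start=1.0, beta_end=0.01, decay_steps=10_000):
    """Linearly anneal the intrinsic-reward weight from beta_start to beta_end."""
    frac = min(step / decay_steps, 1.0)  # training progress, clamped to [0, 1]
    return beta_start + frac * (beta_end - beta_start)


def combined_reward(extrinsic, intrinsic, step):
    """Total reward: extrinsic plus the intrinsic reward scaled by the current weight."""
    return extrinsic + beta_schedule(step) * intrinsic


if __name__ == "__main__":
    for step in (0, 5_000, 10_000, 20_000):
        weight = beta_schedule(step)
        total = combined_reward(1.0, 0.5, step)
        print(step, round(weight, 3), round(total, 3))
```

If the weight stays too high, the agent may keep chasing novelty instead of solving the task. If it drops too quickly, the agent may stop exploring before it has found any extrinsic reward. Choosing the schedule is part of the balancing challenge described above.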

Image by: https://www.frontiersin.org/articles/10.3389/fpsyg.2020.02054/full

Resources and Tools for Intrinsic Motivation in RL

If you want to explore intrinsic motivation and curiosity in RL, or use them in your own RL projects, several resources and tools are available. Seminal papers and surveys on intrinsic motivation and curiosity in RL, such as [1], [2], and [3], offer valuable insights. Open-source frameworks and libraries that implement intrinsic motivation and curiosity algorithms, such as [4], [5], and [6], provide practical implementations. Online communities and platforms dedicated to intrinsic motivation and curiosity in RL, including [7], [8], and [9], support collaborative learning and exploration: they let you connect with others who share your interests, exchange knowledge, and learn from different experiences.

Conclusion

In short, intrinsic motivation is a powerful approach for making RL agents more explainable and interpretable. By cultivating curiosity and providing extra feedback, it can improve learning efficiency, adaptability, and overall performance. It can also help RL agents communicate more effectively with humans, which builds trust and transparency. Challenges remain, but ongoing research and the availability of resources and tools make intrinsic motivation a promising route to a deeper understanding of AI systems.
